machine learning - How to make custom code in Python utilize GPU while using PyTorch tensors and matrix functions - Stack Overflow

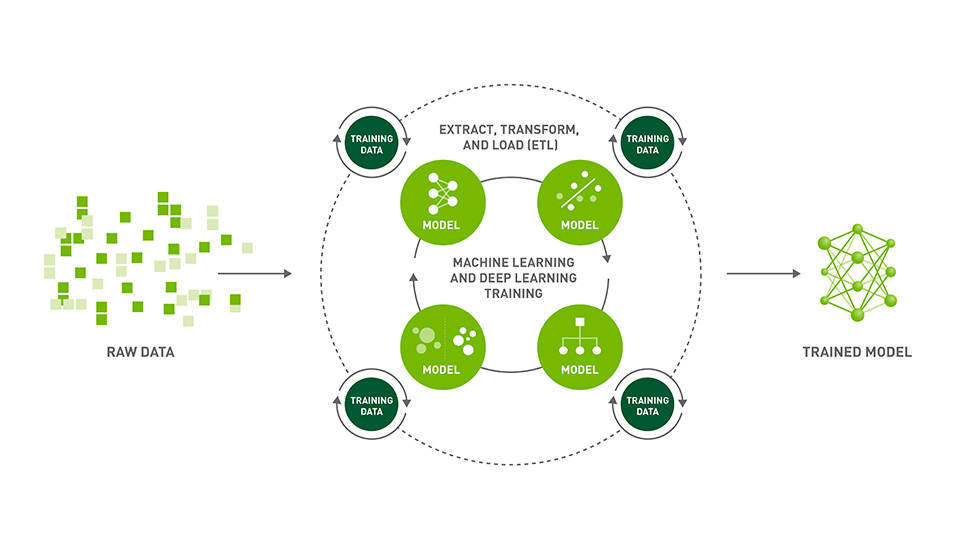

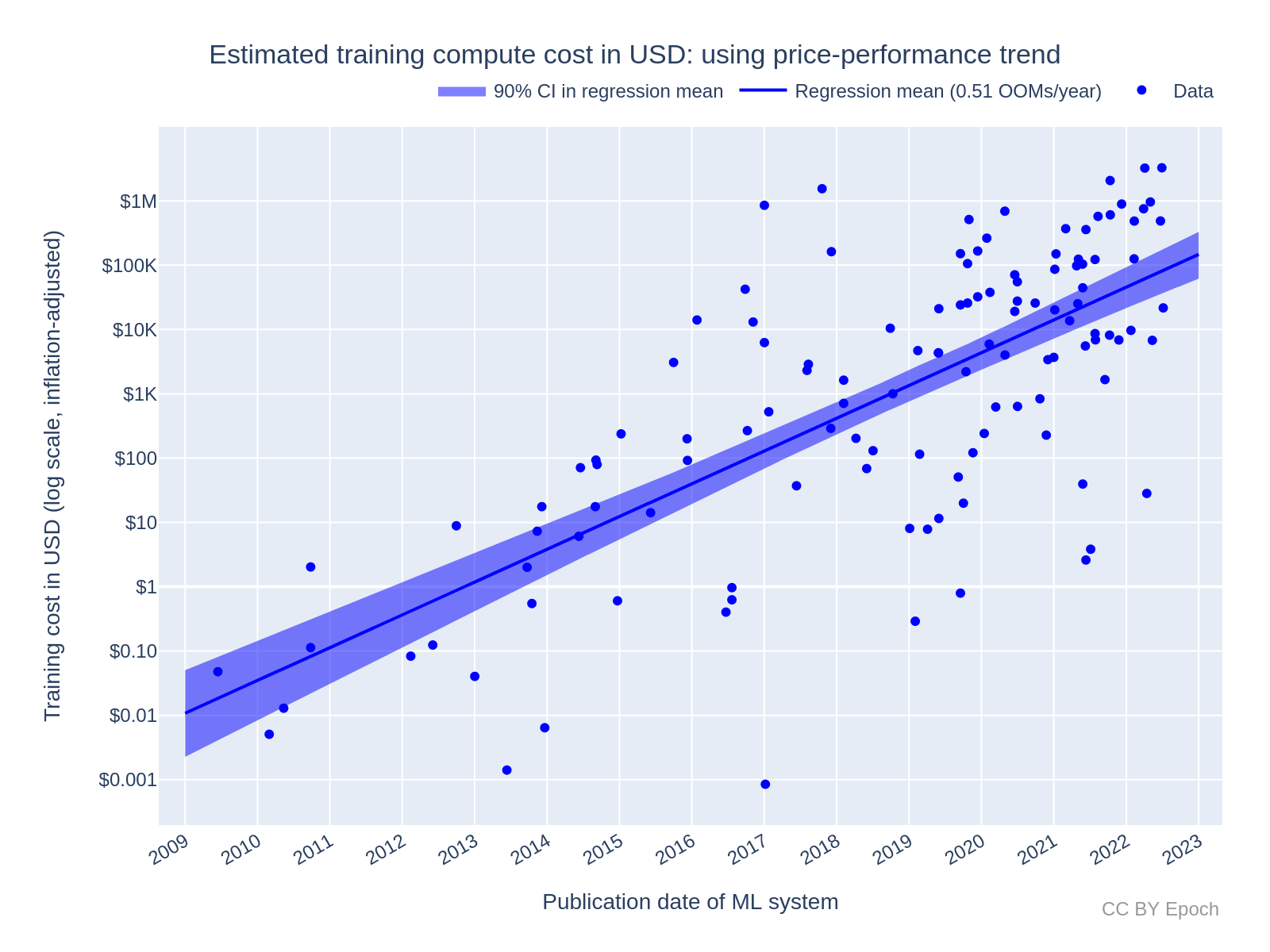

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

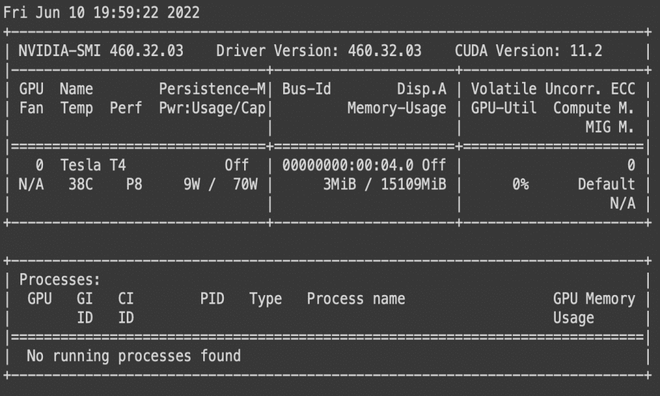

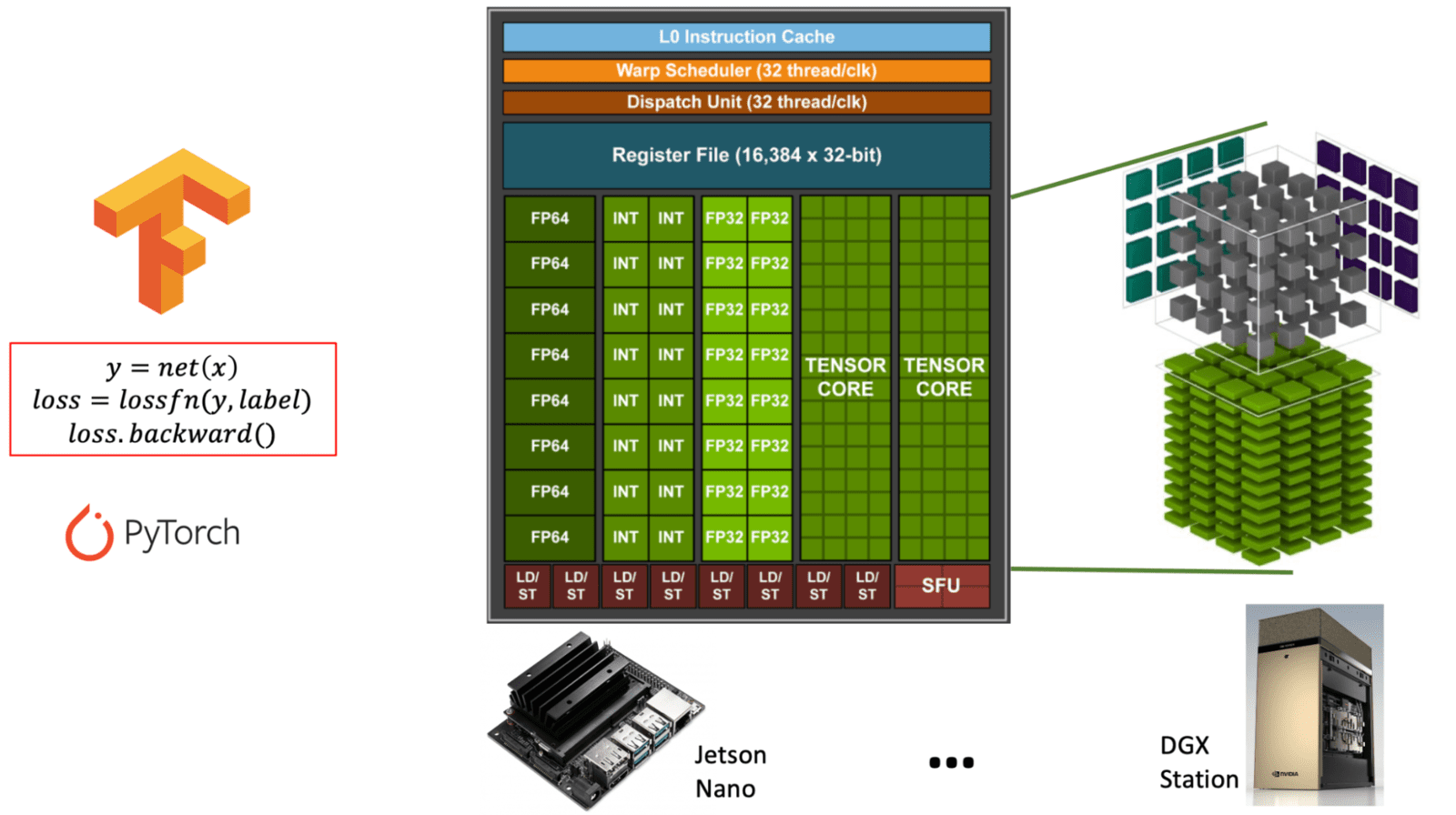

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

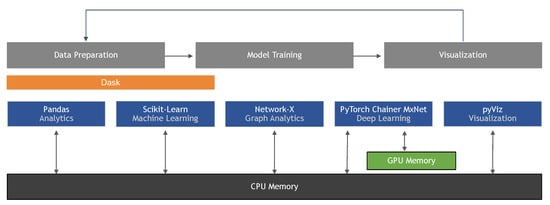

H2O.ai Releases H2O4GPU, the Fastest Collection of GPU Algorithms on the Market, to Expedite Machine Learning in Python | H2O.ai